A Simple Artificial Neuron

Do you want to develop your own artificial intelligence application from scratch? Want to learn how to build a simple artificial neuron model in C++? In this post we explain the idea with a very simple artificial neuron example.

A minimal artificial neuron has an activation value (a), an activation function ( phi() ), and weighted (w) input net links. So it has one activation value, one activation function, and one or more weights, depending on the number of its input nets.

The activation value (a) is the result of the activation function, also called the transfer function or threshold function.

The activation function ( phi() ), also called the transfer function or threshold function, determines the activation value ( a = phi(sum) ) from a given value (sum). Here sum is the sum of the incoming signals, each multiplied by its weight. In other words, the activation function transfers the sum of all weighted signals to a new activation value for the neuron. There are different activation functions; the linear (identity), bipolar, and logistic (sigmoid) functions are the most commonly used. The activation function and its types are explained well here.

A weight (w) scales an input signal arriving at the neuron. Every net link has a weight that determines how much it contributes to the sum of the neuron it feeds.

The output of a neuron is analogous to the axon of a biological neuron, and its value propagates to the input of the next layer through a synapse. In artificial intelligence theory, the core of neural activity is described by a single signal formula: the activation function (transfer function) transfers the summed input to an activation value, a = phi(sum).

In some older articles, sum is shown as a transfer function or as the result of a transfer function; most articles now use activation function and transfer function interchangeably. It transfers the given sum to an activation value. So sum can be the result of a sum() function, but not the result of a transfer function; it is passed to the transfer function (activation function) to determine the activation output. This formula calculates the current activation value of an artificial neuron by applying the activation function to the sum of the input activations multiplied by their weights. In this formula:

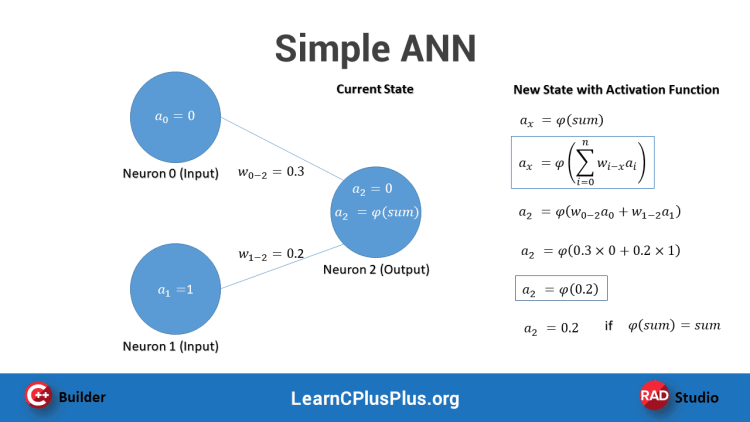
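For reference, the relation described above can be written out with the symbols used in this post. This is a sketch under an assumed indexing: i runs over the n input links of a neuron, a_i is the activation of input i, and w_i is the weight of link i. The indices i and n are notation introduced here and are not taken from the figure.

```latex
\[
  \mathrm{sum} = \sum_{i=1}^{n} a_i \, w_i ,
  \qquad
  a = \varphi(\mathrm{sum})
\]
```

With two inputs this reduces to a = phi(a_1 w_1 + a_2 w_2), which is the shape of the calculation in the example that follows.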

From this very brief theory, we can create a simple neuron model, used below in a simple ANN example.

Very Simple Artificial Neural Network Example in C++

Below we have prepared a very simple artificial neural network example with a given activation function.

In this ANN example we have two input neurons and one output neuron. Neuron 0 and Neuron 1 are connected to Neuron 2. Each neuron has its own activation value (a), and each link between neurons has a weight. So the output neuron's value is the result of applying the activation function to the sum of the two input neuron activations multiplied by their weights.

The output neuron may already hold some value in its current state. We should calculate its new activation value with the activation function phi(). Let's define a simple linear activation function:

    float phi(float sum)
    {
        return sum; // linear transfer function f(sum)=sum
    }

Here we assume that our network has already been trained, which means the weights of the net links are known. Let's define and initialize all of our activation and weight values:

    float a0 = 0.0, a1 = 1.0, a2 = 0;
    float w02 = 0.3, w12 = 0.2;

Note that the number of net links is not the same as the number of neurons, so links and weights can be handled in a separate array. We defined a2 earlier and set it to 0, which is not the correct value for this network; the network should be in a consistent state. So we need to calculate the activation value of the output from these inputs, using our activation function on the sum of the incoming signals multiplied by their weights:

    a2 = phi(a0*w02 + a1*w12);

As you can see, this simple equation shows that the activation value of an artificial neuron depends on the activation values of the input neurons and the weights of the input links.

We think this is about as simple as an artificial neuron and ANN example in C++ can get. It is a good way to understand how a simple neuron simulation works. Let's put it all together in the full example below:

    #include <cstdio>   // std::getchar
    #include <iostream>

    // let's define a transfer function for the output neuron
    float phi(float sum)
    {
        return sum; // linear transfer function f(sum)=sum
    }

    int main()
    {
        // let's define the activity of two input neurons (a0, a1) and one output neuron (a2)
        float a0 = 0.0, a1 = 1.0, a2 = 0;

        // let's define the weights of the signals coming from the two input neurons
        // to the output neuron (0 to 2 and 1 to 2)
        float w02 = 0.3, w12 = 0.2;

        // let's fire our artificial neuron to obtain its activity via the transfer function
        std::cout << "Firing Output Neuron ...\n";
        a2 = phi(a0*w02 + a1*w12);
        std::cout << "Output Neuron Activation Value: " << a2 << '\n';

        std::getchar(); // wait for a key press before exiting
        return 0;
    }